For the robotart.org competition, with a ton of help, I’ve gotten a 6-axis arm working (it accepts x, y, z coordinates).

I configured OpenCV on Ubuntu following this guide: http://www.samontab.com/web/2014/06/installing-opencv-2-4-9-in-ubuntu-14-04-lts/

(The guide tacks a few steps onto the official Linux installation instructions to hook everything up properly. It explains them, but here’s a quick summary:

- Open the linker config file and add the line /usr/local/lib to it:

sudo gedit /etc/ld.so.conf.d/opencv.conf

- Reload the linker configuration:

sudo ldconfig

- Set PKG_CONFIG_PATH so pkg-config can find OpenCV (the guide adds these two lines to a shell startup file):

PKG_CONFIG_PATH=$PKG_CONFIG_PATH:/usr/local/lib/pkgconfig

export PKG_CONFIG_PATH

Heads up: OpenCV takes up a decent chunk of disk space!)

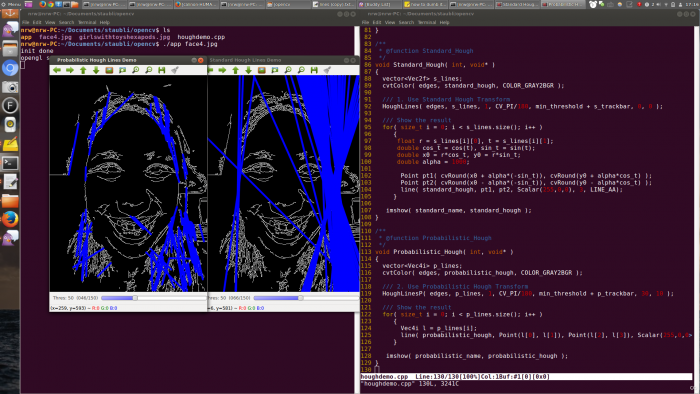

I haven’t used C++ before, though I’ve used C. To compile and run an example, such as this Hough transform demo:

https://github.com/Itseez/opencv/blob/master/samples/cpp/tutorial_code/ImgTrans/HoughLines_Demo.cpp

$ g++ houghdemo.cpp -o app `pkg-config --cflags --libs opencv`

This creates an executable called “app”, which can be run like so:

$ ./app face4.jpg

Man, Canny edge detection on faces looks super creepy and not at all recognizable. Also, I don’t think the Hough transform is the way to go for recognizable faces.

Alright, so what are some alternative approaches?

stroke-based approach?

I started looking into the general problem of “stroke-based approaches”, which will probably be useful later. Here are some good SIGGRAPH 2002 course notes I was skimming through, supplemented with YouTube videos when I got confused:

http://web.cs.ucdavis.edu/~ma/SIGGRAPH02/course23/notes/S02c23_3.pdf

http://stackoverflow.com/questions/973094/easiest-algorithm-of-voronoi-diagram-to-implement

I found this comparison of line-only drawings versus drawings with varying stroke widths (still one color) very compelling:

http://www.craftsy.com/blog/2013/05/discover-the-secrets-to-capturing-a-likeness-in-a-portrait/

It would be interesting to get different stroke widths involved. I wonder what the final Photoshop filter (the one that reduced the image to simple shapes) is called.

Inkscape’s vector tracing of bitmaps is (according to its credits) based on Potrace, created by Peter Selinger.

Alright, that’s not quite doing the trick.

portraits

Okay, there’s this sweet 2004 paper “Example-Based Composite Sketching of Human Portraits” that Pranjal dug up.

http://grail.cs.washington.edu/wp-content/uploads/2015/08/chen-2004-ecs.pdf

They essentially had an artist generate a training set, then parameterized each feature of the face (eyes, nose, mouth), and used a separate system for the hair. The results are really awesome, but to replicate them I’d either have to get someone to dig up 10-year-old code and try to get it running, or generate my own training set, wade into the math, and code it all myself.

Given my limited timeframe (I essentially have two more weeks of working by myself), I should probably focus on more artistic and less technical implementations. Yes, the robot has six axes, but motion planning is hard, and I should focus on getting an acceptable entry done rather than risk having no entry at all.

Stippling

An approach that would be slow but would probably capture the likeness better is stippling. Evil Mad Scientist Labs wrote a stippler in Processing (StippleGen) for their egg-drawing robot; it outputs an SVG.

http://www.evilmadscientist.com/2012/stipplegen2/

http://wiki.evilmadscientist.com/StippleGen
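To get a feel for how a stippler like this might work, here’s a minimal sketch of weighted Voronoi stippling. This is Adrian Secord’s technique, which, as far as I know, is what StippleGen is built on. It is not StippleGen’s code. The sketch works like this:

- The dots start at random positions (a simplification).
- Each pixel is assigned to its nearest dot by brute force, which gives that dot’s Voronoi cell.
- Each dot then moves to the darkness-weighted centroid of its cell, so dots drift toward dark regions.

The function names, parameters, and the 2D-list grayscale image format are all made up for illustration. A real photo would need to be loaded and converted to grayscale first (e.g. with OpenCV).

```python
import random


def weighted_voronoi_stipple(gray, n_points, iterations=10, seed=0):
    """Relax stipple dots toward dark areas (weighted Voronoi / Lloyd iterations).

    gray: 2D list of brightness values in [0, 255], indexed gray[y][x], 0 = black.
    Returns a list of (x, y) dot positions in pixel coordinates.
    """
    height, width = len(gray), len(gray[0])
    rng = random.Random(seed)
    # Darkness acts as the density the dots should follow.
    density = [[1.0 - gray[y][x] / 255.0 for x in range(width)] for y in range(height)]
    # Simplification: start from uniformly random dots.
    points = [(rng.uniform(0, width), rng.uniform(0, height)) for _ in range(n_points)]
    for _ in range(iterations):
        sum_w = [0.0] * n_points
        sum_x = [0.0] * n_points
        sum_y = [0.0] * n_points
        for y in range(height):
            for x in range(width):
                cx, cy = x + 0.5, y + 0.5  # pixel center
                # Brute-force nearest dot = the Voronoi cell this pixel belongs to.
                nearest = min(
                    range(n_points),
                    key=lambda i: (points[i][0] - cx) ** 2 + (points[i][1] - cy) ** 2,
                )
                w = density[y][x]
                sum_w[nearest] += w
                sum_x[nearest] += w * cx
                sum_y[nearest] += w * cy
        # Move each dot to the darkness-weighted centroid of its cell;
        # dots whose cell contains no dark pixels stay where they are.
        points = [
            (sum_x[i] / sum_w[i], sum_y[i] / sum_w[i]) if sum_w[i] > 0 else points[i]
            for i in range(n_points)
        ]
    return points


def stipples_to_svg(points, width, height, radius=0.5):
    """Write the dots out as SVG circles (one dot = one touch of the pen)."""
    circles = "\n".join(
        f'  <circle cx="{x:.2f}" cy="{y:.2f}" r="{radius}"/>' for x, y in points
    )
    return (
        f'<svg xmlns="http://www.w3.org/2000/svg" width="{width}" height="{height}">\n'
        f"{circles}\n</svg>\n"
    )


if __name__ == "__main__":
    # Synthetic 40x20 test image: left half black, right half white.
    img = [[0 if x < 20 else 255 for x in range(40)] for _ in range(20)]
    dots = weighted_voronoi_stipple(img, n_points=30, iterations=5)
    print(stipples_to_svg(dots, 40, 20))
```

The brute-force nearest-dot search costs pixels × dots per iteration. That’s fine for a tiny test image but slow for a real portrait, which is where a proper Voronoi algorithm would pay off.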

Presumably the EggBot software converts the SVG to G-code at some point.

http://wiki.evilmadscientist.com/Installing_software

If I can’t figure out where their generator is, or want to stick to Python, there seems to be PyCAM, plus a few other tools:

- http://pycam.sourceforge.net/

- Makerbot Unicorn Inkscape Plugin http://www.thingiverse.com/thing:5986

- Inkscape Gcodetools http://www.cnc-club.ru/forum/viewtopic.php?t=35

- MakerCAM http://www.shapeoko.com/wiki/index.php/MakerCAM

Todo

- http://infoscience.epfl.ch/record/60068/files/calinon-HUMANOIDS2005.pdf

- Work on the actual brush part (manipulator, palette)

- Multiple colors?

- Think about what I want to convey artistically. What would look nice yet be easy to implement?

Calligraphy

First, inspiration: a 250-year-old writing automaton. You can swap out cams to change the gearing and change what it writes. Crazy!

Okay, so something more modern: this robot arm uses the Hershey vector fonts to draw kanji.

https://en.wikipedia.org/wiki/Hershey_fonts
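Hershey glyphs are already just lists of line segments, which is what makes them nice for a robot. Here’s a minimal sketch of a parser that assumes the common .jhf text layout:

- a 5-character glyph number;
- a 3-character count of coordinate pairs (including the left/right margin pair);
- pairs of characters whose offset from 'R' gives each coordinate, where the pair " R" means pen up.

The function names are hypothetical. The sample record is made up (a simple cross), not a real glyph from the Hershey set.

```python
def decode(ch):
    """Hershey coordinates are stored as characters offset from 'R'."""
    return ord(ch) - ord("R")


def parse_hershey_glyph(record):
    """Parse one single-line Hershey glyph record into (left, right, strokes).

    Assumed layout: chars 0-4 glyph number (ignored here), chars 5-7 number of
    coordinate pairs including the left/right margin pair, chars 8-9 the margins,
    then coordinate pairs. The pair " R" lifts the pen. Records that wrap across
    lines must be joined into one line before calling this.
    """
    n_pairs = int(record[5:8])
    left, right = decode(record[8]), decode(record[9])
    body = record[10:]
    strokes, current = [], []
    for k in range(n_pairs - 1):
        pair = body[2 * k:2 * k + 2]
        if pair == " R":  # pen up: finish the current stroke
            if current:
                strokes.append(current)
            current = []
        else:
            current.append((decode(pair[0]), decode(pair[1])))
    if current:
        strokes.append(current)
    return left, right, strokes


def strokes_to_xy(strokes, left, origin=(0.0, 0.0), scale=1.0):
    """Place a glyph with its left margin at origin; flip y, since Hershey y points down."""
    ox, oy = origin
    return [[(ox + (x - left) * scale, oy - y * scale) for x, y in s] for s in strokes]


if __name__ == "__main__":
    # Made-up record (a simple cross), not a real glyph from the Hershey set.
    left, right, strokes = parse_hershey_glyph("    1  6IZRFRW RNRVR")
    print(strokes_to_xy(strokes, left, scale=0.5))
```

The margins also give each glyph’s advance width (right - left), which is what you’d use to space characters along a line before sending the strokes to the arm.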

Turns out there is an open source SVG font for Chinese (Hershey does traditional Chinese characters, not simplified), so now I can write messages to my parents :3, if I can convert the SVG to commands for the robot.

https://en.wikipedia.org/wiki/WenQuanYi

Haha, there is even a startup that writes notes for you…

http://www.wired.com/2015/02/meet-bond-robot-creates-handwritten-notes/

Evil Mad Scientist Labs has done some work on the topic.

http://www.evilmadscientist.com/2011/hershey-text-an-inkscape-extension-for-engraving-fonts/

https://github.com/evil-mad/EggBot/

This seems tractable. The key step seems to be SVG-to-G-code conversion. I should be able to roll my own without too much difficulty, or else use existing libraries.
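Rolling my own could start out something like this minimal sketch. It makes several assumptions:

- SVG paths contain only straight segments (M, L, H, V, Z and their lowercase relative forms). Curves would have to be flattened into line segments first.
- The pen or brush is lifted and lowered by changing Z.
- The controller accepts plain G0/G1 moves with ';' comments.

The function names and the z_up, z_down, and feed defaults are hypothetical placeholders, not values tuned for the arm.

```python
import re

# A token is a single command letter or a number such as "10", "-5.5" or "1e-3".
_TOKEN = re.compile(r"[A-Za-z]|[-+]?(?:\d+\.?\d*|\.\d+)(?:[eE][-+]?\d+)?")


def path_to_polylines(d):
    """Convert an SVG path "d" string made of straight segments into polylines.

    Supports M/L/H/V/Z and their relative (lowercase) forms only; curves such as
    C, Q or A raise ValueError and would need to be flattened first.
    """
    tokens = _TOKEN.findall(d)
    polylines, current = [], []
    x = y = 0.0
    start = (0.0, 0.0)
    cmd, i = None, 0
    while i < len(tokens):
        if tokens[i].isalpha():
            cmd = tokens[i]
            i += 1
            if cmd in "Zz":
                # Close the subpath by returning to its starting point.
                if current:
                    current.append(start)
                    polylines.append(current)
                    current = []
                x, y = start
            continue
        rel = cmd.islower()
        if cmd in "Mm":
            mx, my = float(tokens[i]), float(tokens[i + 1])
            i += 2
            if len(current) > 1:
                polylines.append(current)
            x, y = (x + mx, y + my) if rel else (mx, my)
            start, current = (x, y), [(x, y)]
            cmd = "l" if rel else "L"  # extra pairs after a moveto are linetos
            continue
        if cmd in "Ll":
            nx, ny = float(tokens[i]), float(tokens[i + 1])
            i += 2
            if rel:
                nx, ny = x + nx, y + ny
        elif cmd in "Hh":
            nx, ny = float(tokens[i]) + (x if rel else 0.0), y
            i += 1
        elif cmd in "Vv":
            nx, ny = x, float(tokens[i]) + (y if rel else 0.0)
            i += 1
        else:
            raise ValueError(f"unsupported path command {cmd!r}")
        if not current:
            current = [(x, y)]
        x, y = nx, ny
        current.append((x, y))
    if len(current) > 1:
        polylines.append(current)
    return polylines


def polylines_to_gcode(polylines, scale=1.0, z_up=5.0, z_down=0.0, feed=1000):
    """Emit G-code that lifts the pen, travels, lowers it, then draws each polyline."""
    out = ["G21 ; units: millimeters", "G90 ; absolute positioning", f"G0 Z{z_up:.2f}"]
    for poly in polylines:
        x0, y0 = poly[0]
        out.append(f"G0 X{x0 * scale:.2f} Y{y0 * scale:.2f}")  # travel with pen up
        out.append(f"G1 Z{z_down:.2f} F{feed}")  # pen down
        for px, py in poly[1:]:
            out.append(f"G1 X{px * scale:.2f} Y{py * scale:.2f} F{feed}")
        out.append(f"G0 Z{z_up:.2f}")  # pen up
    return "\n".join(out)


if __name__ == "__main__":
    shapes = path_to_polylines("M 0 0 L 10 0 L 10 10 Z M20,20 h5 v5")
    print(polylines_to_gcode(shapes, scale=0.5))
```

Since the arm already accepts x, y, z coordinates, the same polylines could also be sent to it directly as (x, y, z) waypoints, skipping G-code entirely. SVG’s y axis points down, so the coordinates may need flipping to match the arm’s frame.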

misc. videos

^ hi again, Disney Research

impressive with just derpy hobby servos

and of course, mcqueen