yea, made some bolts — these are notes for myself (as is the rest of this blog). the tl;dr is https://makerworld.com/en/models/791309-flat-thumb-screw

backstory

Needed some 5/16” x 40mm (lol) bolts for furniture assembly recently. Lost the parts, found the parts, lost the bolts. Such is life. Debated buying off Amazon, suffered through the Amazon interface for picking hardware (no, I actually want a 5/16, don’t give me results for 3/8), got ChatGPT to give me incorrect info (no, they’re not dome heads just because i said “it’s more of a dome”, they’re button heads), pondered whether it was worth $10 to save an hour of my time (it was, but man, $1 a bolt?), then decided heck it, they’re not structural anyway. They’re just for keeping the feet mostly attached to the mostly stationary bookends of the sofa. (ehehe, I got an experimental sofa where one end is a bookshelf and the other end is a sorta-laptop-able surface with two stools.)

ok actually useful tidbit

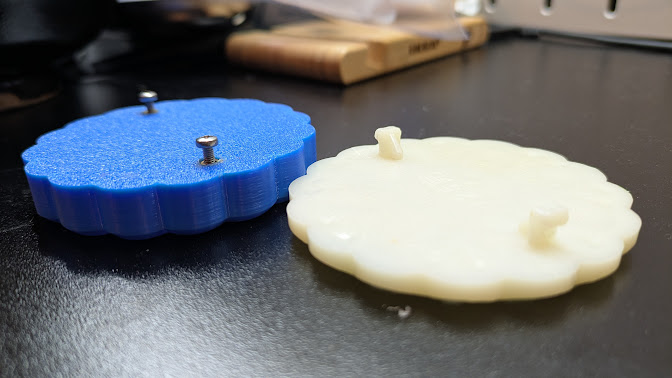

Found these bolts that print lying flat, so the layers run in the “correct” direction and the bolt doesn’t shear as easily.

https://makerworld.com/en/models/791309-flat-thumb-screw

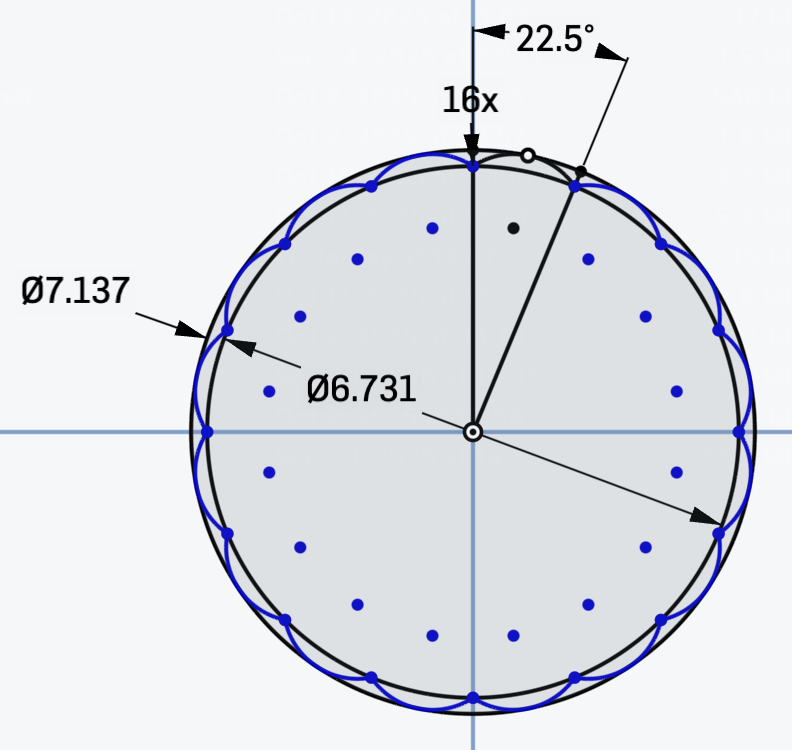

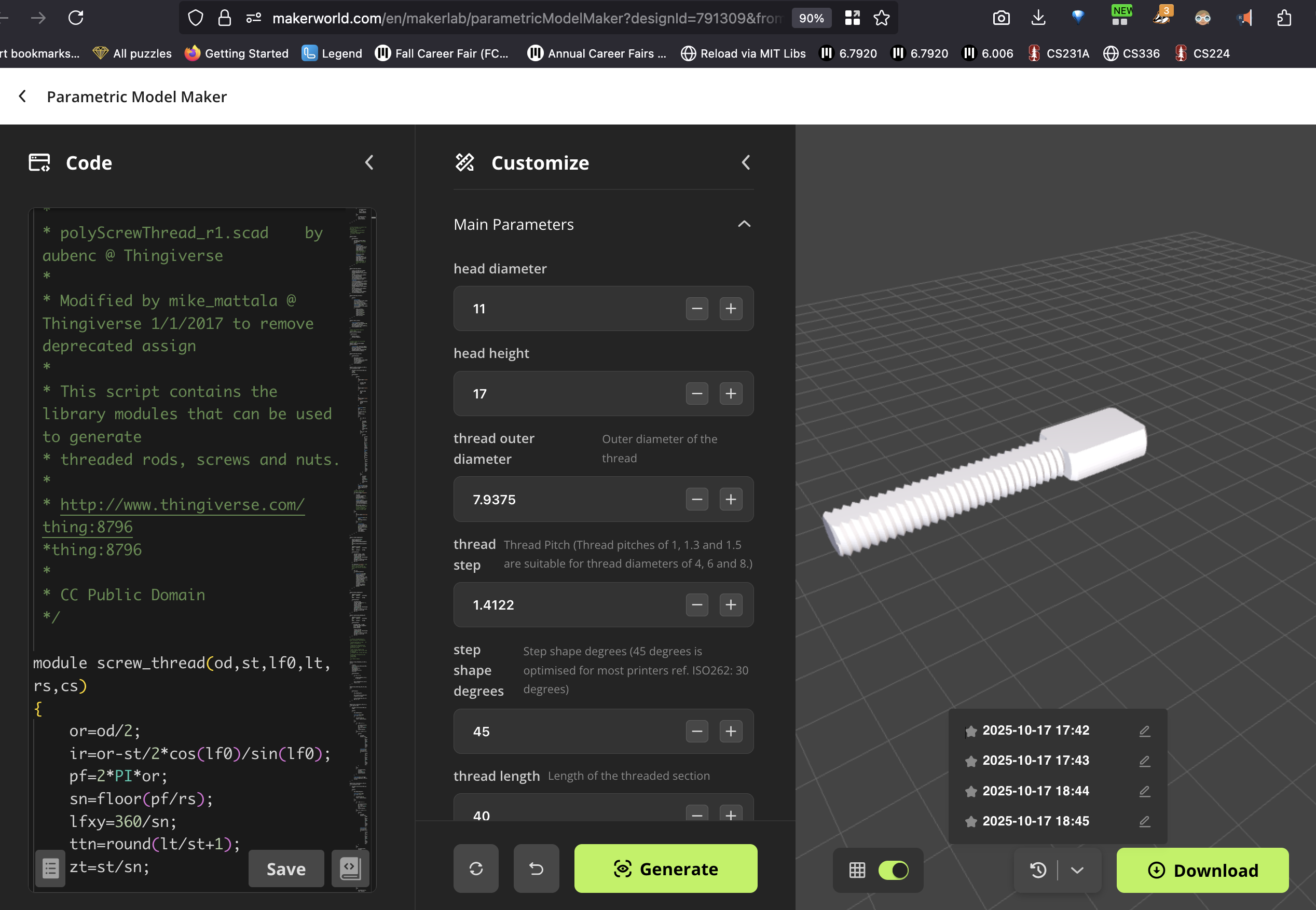

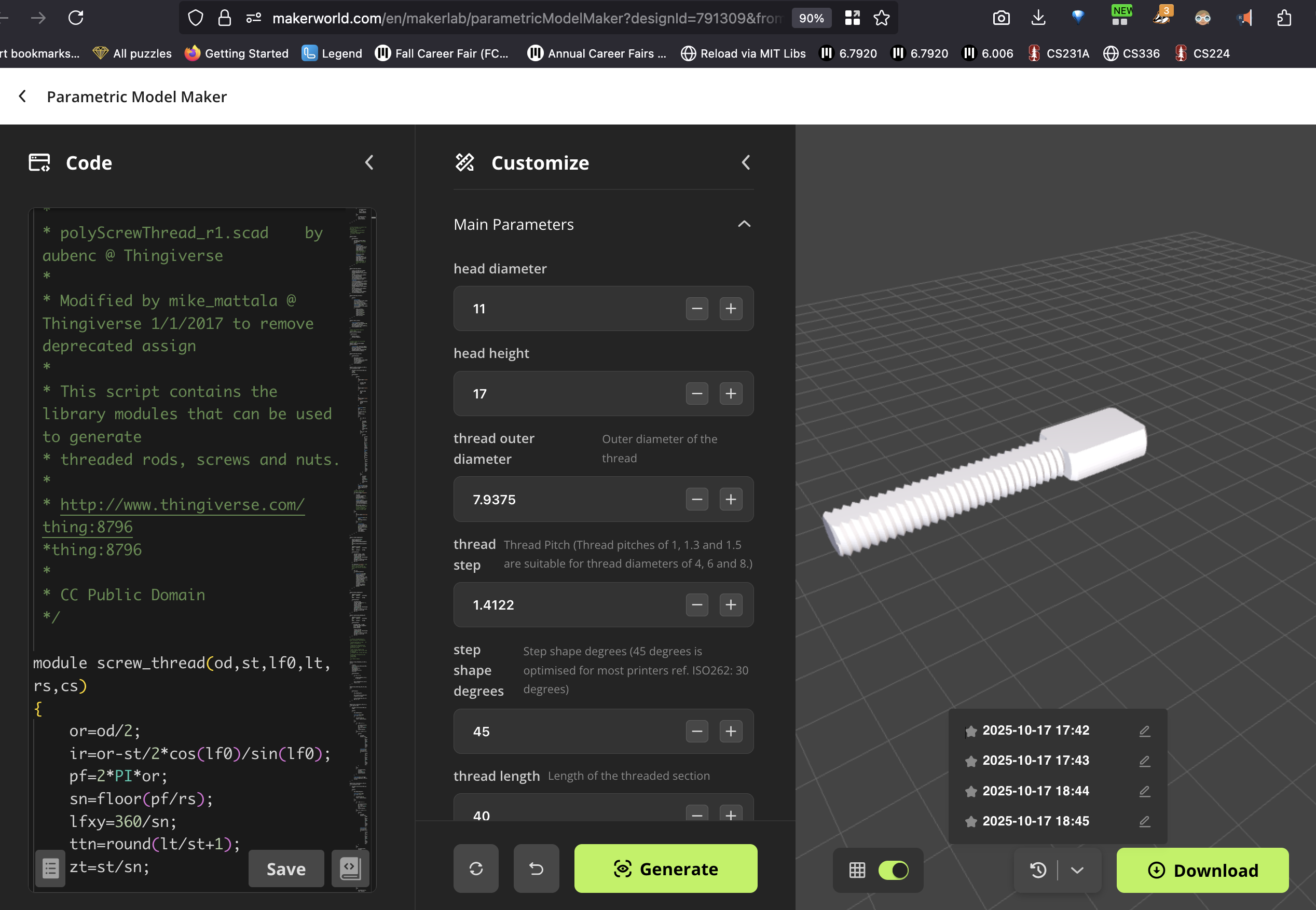

There are really only two key parameters: the thread outer diameter, which is 5/16 in converted to mm, so 7.9375 mm; and the thread step (pitch). Looking online, 5/16” UNC (coarse, the default, vs. UNF fine) has 18 threads per inch, so the step is 1/18 in, which is 1.4111 mm.
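The conversion is just arithmetic, but here it is as a small Python sketch so I don’t fat-finger it next time. The 24 TPI fine-thread (UNF) entry is from standard thread charts and is only there for comparison; it isn’t something the model page specifies.

```python
MM_PER_INCH = 25.4  # exact by definition

# Threads per inch for 5/16" bolts (standard Unified thread series values).
TPI_5_16 = {"UNC": 18, "UNF": 24}


def inch_to_mm(inches: float) -> float:
    """Convert a length in inches to millimetres."""
    return inches * MM_PER_INCH


def tpi_to_pitch_mm(tpi: float) -> float:
    """Thread step (pitch) in mm: one inch divided by the number of threads in it."""
    return MM_PER_INCH / tpi


outer_diameter_mm = inch_to_mm(5 / 16)
pitch_mm = tpi_to_pitch_mm(TPI_5_16["UNC"])
print(f"outer diameter: {outer_diameter_mm:.4f} mm, step: {pitch_mm:.4f} mm")
# outer diameter: 7.9375 mm, step: 1.4111 mm
```

Those two printed numbers are what go into the outer diameter and thread step fields of the customizer.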

side note

Note: the values in the screenshot (i.e. the MakerWorld JavaScript app) are off by one. I looked at the original source code to figure this out. The source says

//Thread step or Pitch (2mm works well for most applications ref. ISO262: M3=0.5,M4=0.7,M5=0.8,M6=1,M8=1.25,M10=1.5) — CC BY NC SA mike_linus thingiverse 2013 “Nut Job”.

which matches charts online.
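If I ever need to double-check a customizer’s pitch values against that comment, a quick Python sketch like this would do it. The table is copied from the comment; `check_pitches` and the shifted example are hypothetical, made up to illustrate what an off-by-one looks like, not the app’s actual values.

```python
# ISO 262 coarse pitches (mm), as listed in the "Nut Job" source comment.
ISO262_COARSE_PITCH = {"M3": 0.5, "M4": 0.7, "M5": 0.8, "M6": 1.0, "M8": 1.25, "M10": 1.5}


def check_pitches(shown: dict[str, float]) -> list[str]:
    """Return the sizes whose displayed pitch disagrees with the reference table."""
    return [
        size
        for size, pitch in shown.items()
        if size in ISO262_COARSE_PITCH and abs(pitch - ISO262_COARSE_PITCH[size]) > 1e-9
    ]


# Hypothetical off-by-one display (made up): each size shows the next size's pitch.
sizes = list(ISO262_COARSE_PITCH)
pitches = list(ISO262_COARSE_PITCH.values())
shifted = dict(zip(sizes, pitches[1:]))

print(check_pitches(ISO262_COARSE_PITCH))  # []
print(check_pitches(shifted))  # ['M3', 'M4', 'M5', 'M6', 'M8']
```

A shift by one position makes every size mismatch at once, which is a pretty quick tell compared to a single typo.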

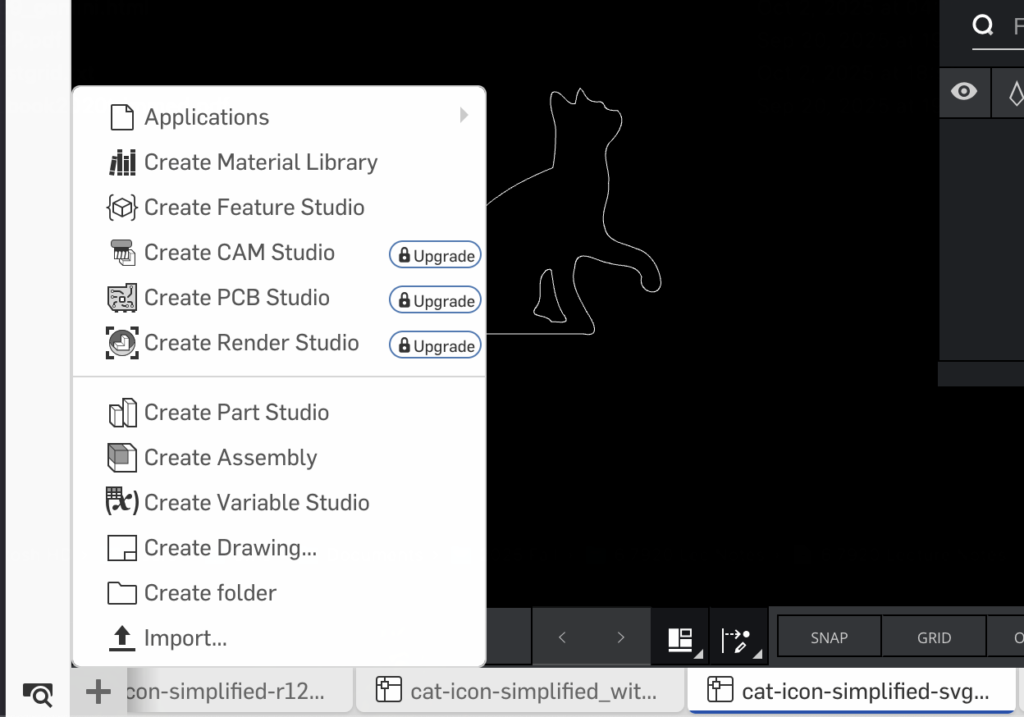

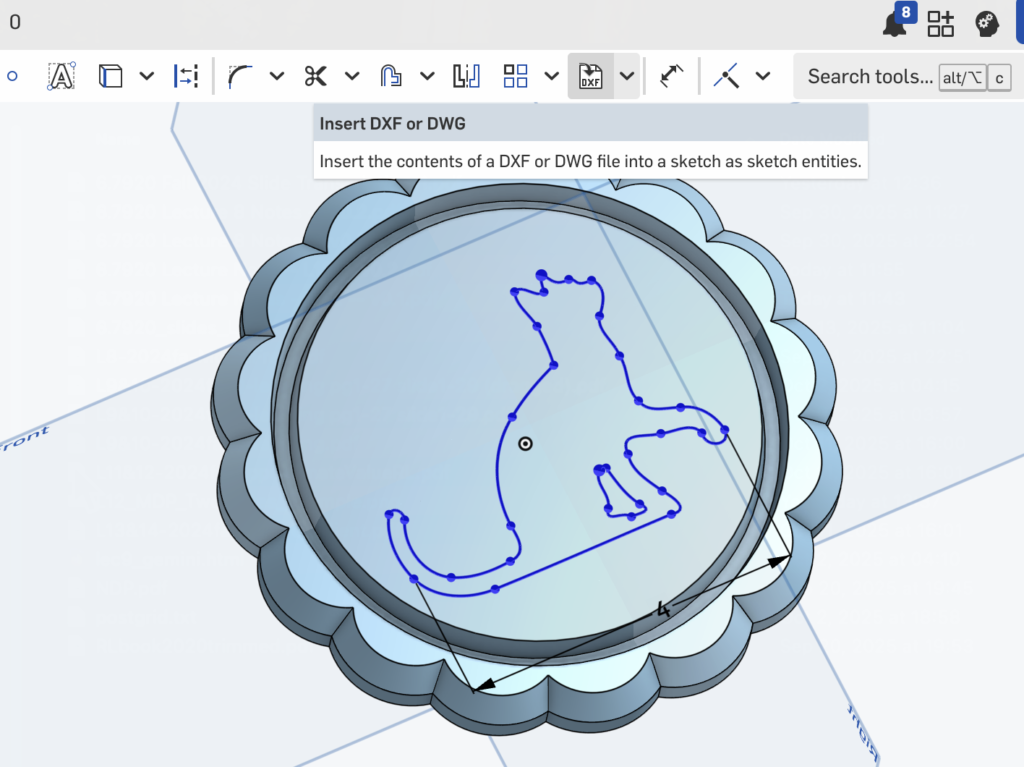

CAD source code online

Interesting to see code for hardware floating around. I’ve always been reluctant to code what is inherently a 3D process.

to be fair, even a GUI on a flat screen was hard for me. I struggled to learn solidworks, and it took a while to learn to specify things by their shape rather than by how i’d mark them out by hand with a ruler and sharpie. Again the curse of “i should already know this,” with an undertone of “otherwise people will realize i don’t know anything” (and stop being my friend?), despite being in the class precisely to learn how to do it. ??? lol, past and present me.

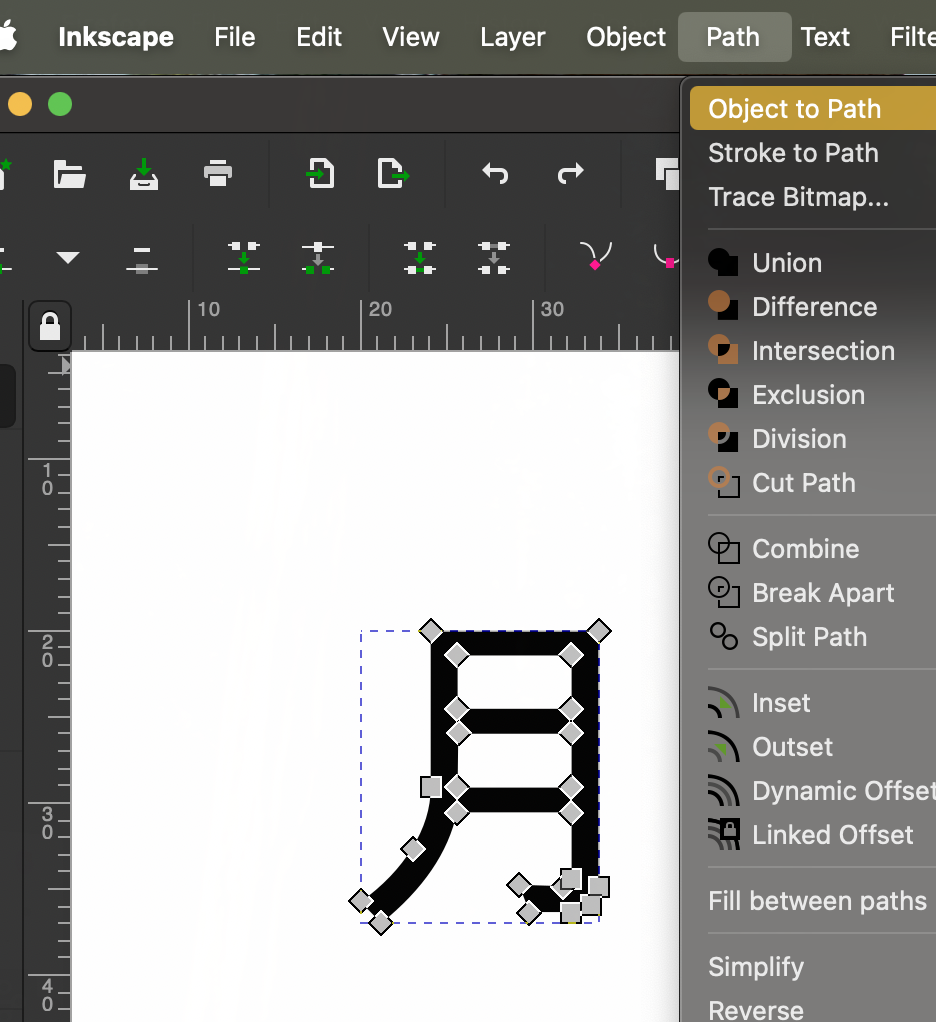

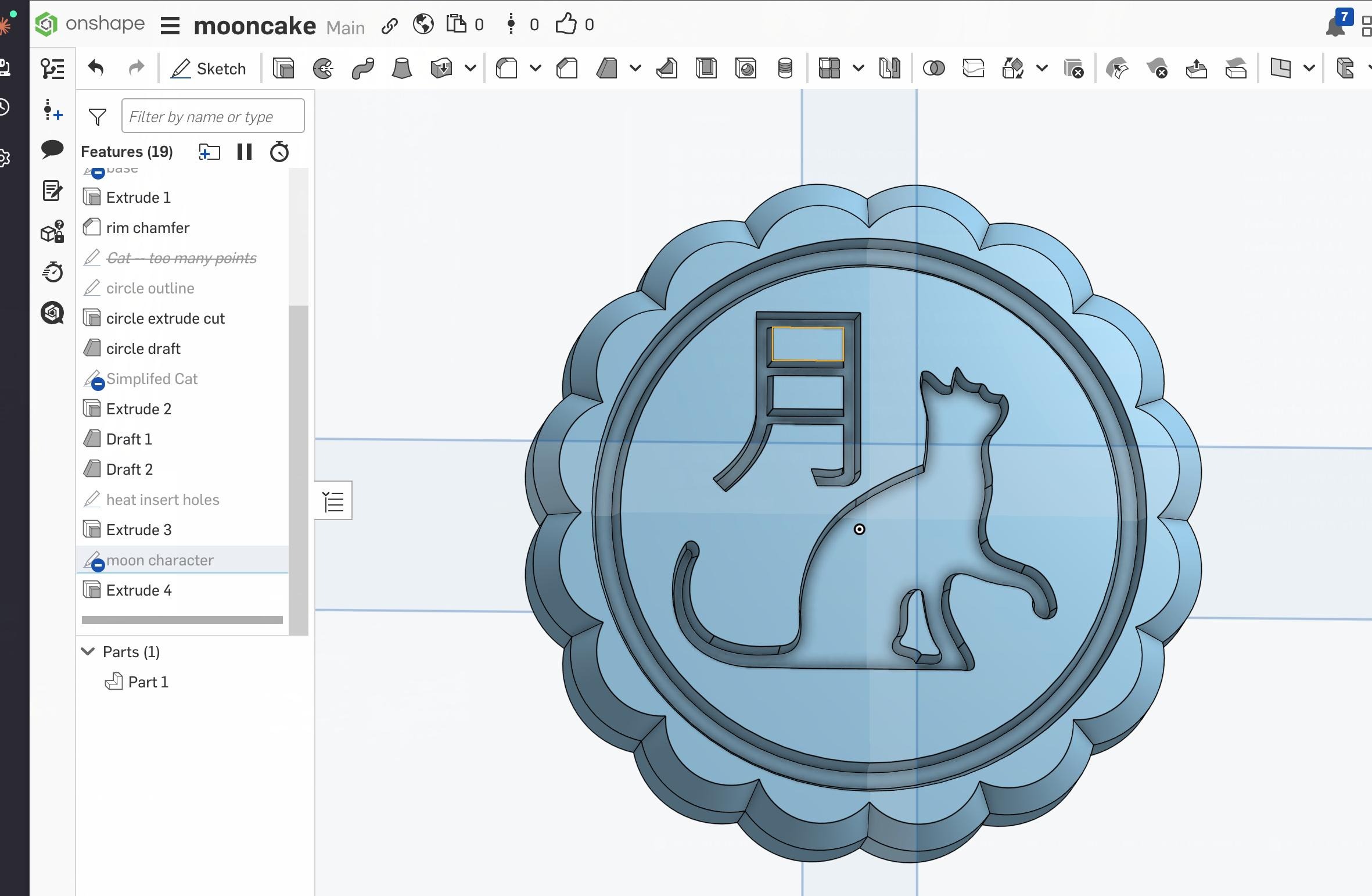

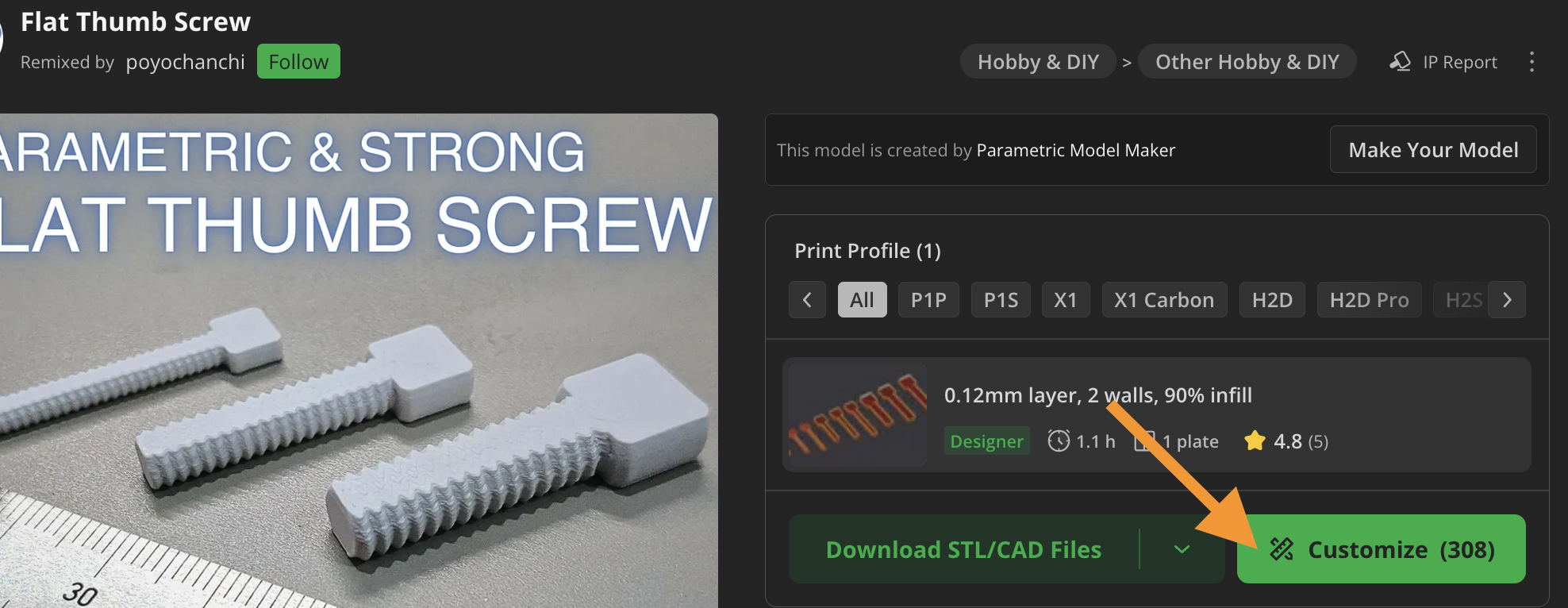

There’s this customize button.

This pops up a window where you can even see the source code, and there’s version history too, which is great since I accidentally lost the thread parameters but could just go back in time to get them.

Is this not the dream of open source hardware? Look at this:

It also uses the library polyScrewThread_r1.scad (license: CC Public Domain), originally created by aubenc, modified by Mike_mattala.

other notable models

I think the idea of reinforcing printed bolts with wood screws inside them also works well for this nonstructural purpose. I dig it. https://makerworld.com/en/models/980896-collection-metric-bolts-reinforced-by-wood-screws#profileId-968781

the world of makerworld

Interestingly, I always used the “boost” tokens (which you get for free when you print MakerWorld models on a Bambu Lab printer, but which are worth a decent amount of money, about $1 each) as a “hey thanks, this was useful and saved me time.” But apparently they’re meant for “ambitious” projects like CADing an entire wind model. Still, it’s a super neat way to convince people to make lots of models. It’s very smart: the hardware has some novelty (e.g. stepper motor control), but most of the innovation and competitive advantage is in the software: the mobile app, the desktop site, sharing features, etc.

Now that fiber lasers are a thing, I wonder whether, once their cost comes down from $3k to $1k, we’ll start seeing a new era of sharing circuits as well. There are certainly companies making in-browser circuit CAD and design review a thing. At a career fair I came across one (AllSpice) that’s bringing GitHub-style workflows, e.g. pull requests, to circuits.

Goals check

well, not doing great on my goals.

- turn thesis into paper: not even started

- hamster iot / mcup / pov yoyo: not even started

- learning algorithms: barely started, stalled out

been making lecture notes, but those are taking wayyyy too long. playing around with the llms for that. i guess it gets me out of writer’s block, though i mostly have to rewrite everything.

need to update my portfolio, and i want to learn, well, everything. there’s really digging into tools like kicad, onshape, and spice (instead of learning them haphazardly). there’s refreshing computer vision fundamentals, re-learning control theory, taking an llm-from-scratch class, and on top of that algorithms. and i’m making progress on RL by sheer dint of having to write the lecture notes, but i’m not getting any hands-on experience actually implementing it.

ah! somehow i’ve made funmployment stressful. and when it’s not stressful i am stressed that i should be stressed. and i’m still perpetually behind. heh.

proof that it is a real tree! so many leaves.

the birth grounds of our tree. all the trees are a flat cost so that people don’t cut down all the baby trees.

the tree farm has this machine that wraps the tree and it makes it huggable!